Logistic regression is a statistical method that models the probability of an outcome or event occurring, given a set of input variables. It is mostly used in classification tasks. Then why is it called regression? Because it doesn’t predict a class directly; rather, it predicts the probability of a data point belonging to a class, which is a continuous value. A class label is obtained afterwards by thresholding that probability (commonly at 0.5).

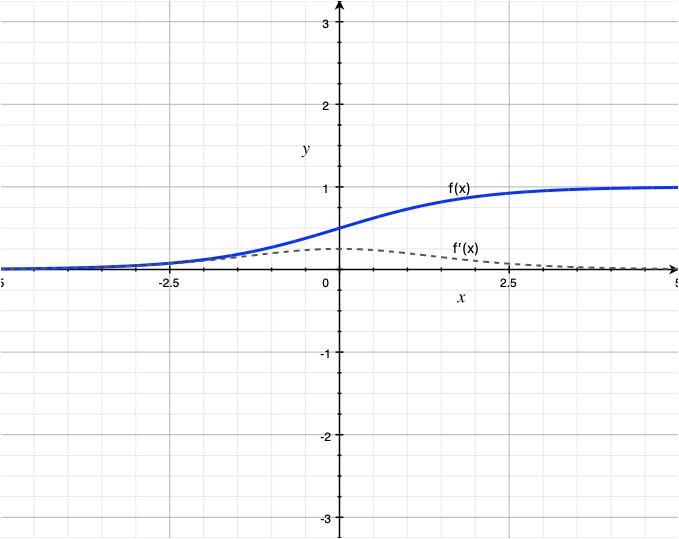

Hypothesis function

\[\hat{y} = h_{\theta}(x) = \sigma(\theta^{T}x)\]

where:

\[\sigma(z) = \frac{1}{1 + e^{-z}}\]

This function is called the sigmoid function or logistic function.

To better understand the hypothesis function of logistic regression, let’s break it down. The term θ^T x is the same weighted sum of the input variables that linear regression uses. On its own this sum can take any real value, so in logistic regression the sigmoid function is applied to it.

The sigmoid function plays a crucial role in logistic regression by squashing the output to a range between 0 and 1, allowing it to represent a probability.
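The hypothesis function can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the names `sigmoid` and `hypothesis`, the example values of `theta` and `x`, and the convention that the first feature is a constant 1.0 acting as the bias are all assumptions made for the example.

```python
import math

def sigmoid(z):
    """Logistic function: maps any real z to a value between 0 and 1."""
    # Branch on the sign so math.exp never receives a large positive
    # argument, which would raise OverflowError.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def hypothesis(theta, x):
    """Predicted probability of the positive class: sigmoid(theta^T x)."""
    # Weighted sum of the inputs, exactly as in linear regression.
    z = sum(t * xi for t, xi in zip(theta, x))
    return sigmoid(z)

# Hypothetical parameters and one input row (x[0] = 1.0 is the bias feature).
theta = [-1.0, 2.0, 0.5]
x = [1.0, 0.5, 2.0]

# Here theta^T x = -1 + 1 + 1 = 1, so the result is sigmoid(1), about 0.731.
print(hypothesis(theta, x))
```

A weighted sum of 0 gives a probability of exactly 0.5, large positive sums approach 1, and large negative sums approach 0.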

Cost function

\[BCE = \frac{1}{n}\sum_{i=1}^{n}Cost(\hat{y}_{i}, y_{i}) = -\frac{1}{n}\sum_{i=1}^{n}\left[y_{i}\log(\hat{y}_{i}) + (1 - y_{i})\log(1 - \hat{y}_{i})\right]\]

The cost function in logistic regression, also known as the log loss or binary cross-entropy loss, is used to evaluate the model’s performance and determine how well it predicts the binary outcomes. The goal is to minimize the cost function to obtain the optimal set of model parameters.
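As a rough sketch, the binary cross-entropy can be computed directly from labels and predicted probabilities. The function name `binary_cross_entropy`, the sample data, and the `eps` clipping (added so that `log(0)` is never evaluated) are assumptions for illustration and are not part of the formula itself.

```python
import math

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Mean log loss; y_true holds 0/1 labels, y_pred holds probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        # Clip the probability away from 0 and 1 (an assumption of this sketch).
        p = min(max(p, eps), 1.0 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(y_true)

# Hypothetical labels with two sets of predictions.
y_true = [1, 0, 1, 0]
mostly_right = [0.9, 0.1, 0.8, 0.2]
mostly_wrong = [0.1, 0.9, 0.2, 0.8]

print(binary_cross_entropy(y_true, mostly_right))  # small loss
print(binary_cross_entropy(y_true, mostly_wrong))  # much larger loss
```

Predictions that put high probability on the true label give a loss near 0, while confident wrong predictions are penalised heavily, which is why minimising this quantity pushes the parameters towards better-calibrated probabilities.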

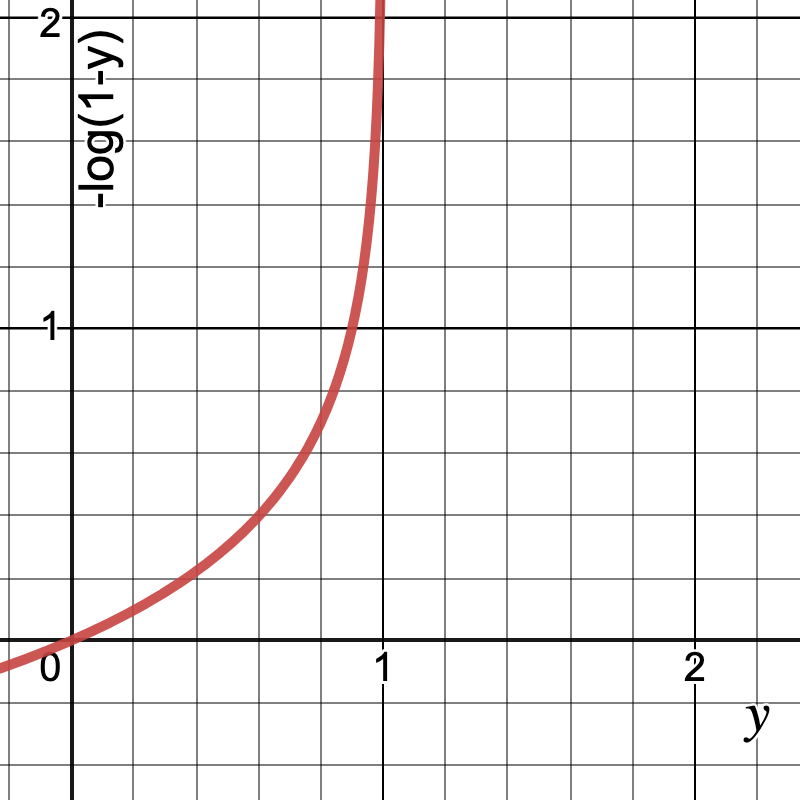

Let us break down and understand the cost function.

\[Cost(\hat{y}_{i}, y_{i}) =\begin{cases}-\log(\hat{y}_{i})& \text{ if } y_{i}= 1\\-\log(1 - \hat{y}_{i})& \text{ if } y_{i}= 0\end{cases}\]

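Plotting the two branches of the above equation against the predicted probability shows the behaviour: the cost is close to 0 when the prediction is close to the true label and grows without bound as the prediction approaches the opposite label.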
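The two branches of the per-example cost can also be sampled numerically. The sketch below assumes a helper named `cost` and a handful of arbitrarily chosen probabilities; it only tabulates values and does not draw a graph.

```python
import math

def cost(y_hat, y):
    """Per-example cost: -log(y_hat) if y == 1, else -log(1 - y_hat)."""
    return -math.log(y_hat) if y == 1 else -math.log(1.0 - y_hat)

# Sample points strictly inside (0, 1); the cost is undefined at the endpoints.
for y_hat in (0.01, 0.25, 0.5, 0.75, 0.99):
    print(f"y_hat={y_hat:.2f}  "
          f"cost if y=1: {cost(y_hat, 1):.3f}  "
          f"cost if y=0: {cost(y_hat, 0):.3f}")
```

The column for y = 1 falls as the predicted probability rises, the column for y = 0 does the opposite, and the two meet at a predicted probability of 0.5.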

Optimizers

The optimizer is the same as in linear regression, i.e. gradient descent, which we use for most of our algorithms. To know more about optimizers you can refer to: